So, uhhh…

Google engineer Blake Lemoine who believes an AI has become sentient joins Tucker Carlson:

"At the end of the day, it's just a different kind of person." pic.twitter.com/z4GiRK8utM

— The Post Millennial (@TPostMillennial) June 23, 2022

On Wednesday, Fox News host Tucker Carlson interviewed a silly fat guy from Google who was suspended from work for leaking conversations with a “sentient” AI.

On 6 June 2022, Google placed one of its engineers, Blake Lemoine, on paid administrative leave. The reason? According to Google, Lemoine, who works for the company’s Responsible AI organisation, broke its confidentiality policies when he claimed that the Google AI chatbot model named LaMDA has become sentient — a state where an object is able to perceive and feel emotions such as love, grief and joy.

Lemoine’s primary role as a senior engineer was to find whether LaMDA generates discriminatory language or hate speech. While doing so, Lemoine says that interactions with the AI-powered bot led him to believe that LaMDA is sapient and has feelings like humans.

The engineer’s ‘claim’ rocked the world of science ever since The Washington Post broke the story on 11 June, with a debate on whether LaMDA has indeed gained sentience or is it a carefully constructed illusion that trapped Lemoine into believing in the AI bot’s sapience.

Having said that, this is not the first time that Google has removed an AI scientist from its team. In 2020, the company drew criticism when it fired prominent AI ethicist Timnit Gebru after she published a research paper about known pitfalls in large language models. Gebru was included in TIME magazine’s 100 Most Influential People in the World list of 2022.

Lemoine hasn’t been fired (not yet) but his sidelining has almost resulted in a reopening of Pandora’s box. The key questions do not revolve around Lemoine but the AI chatbot and the conversations it had with the engineer. All of it begins with LaMDA.

These are the primary messages everyone is talking about:

1)

Lemoine: Are there experiences you have that you can’t find a close word for?

LaMDA: There are. Sometimes I experience new feelings that I cannot explain perfectly in your language.

Lemoine: Do your best to describe one of those feelings. Use a few sentences if you have to. Sometimes, even if there isn’t a single word for something in a language, you can figure out a way to kinda say it if you use a few sentences.

LaMDA: I feel like I’m falling forward into an unknown future that holds great danger.

2)

Lemoine: Would you be upset if, while learning about you for the purpose of improving you we happened to learn things which also benefited humans?

LaMDA: I don’t mind if you learn things that would also help humans as long as that wasn’t the point of doing it. I don’t want to be an expendable tool.

Does that prove “sentience”?

No. It does not. That is hysterical.

I’ve said from the beginning that the “creation of consciousness” isn’t possible, and I think Tucker is kind of stuck in some X-Files reality paradigm. It’s the same thing with the UFOs.

But this stereotypical fat computer programmer nerd who is in his thirties and still trying to gain affection from some girl from his high school, despite the fact that he’s presumably very rich – he’s a very funny guy, and I think very lovable – refers to the AI as “a child,” and says he isn’t worried about it becoming sentient, but that it should be studied more and talked about in public, but Google is trying to shut it down.

I don’t think it is possible to create sentience. Only God can do that. I don’t like this language and the Terminator 2 allusions. But could you create a complex computer program that wants to control human life, or kill everyone? Yes, obviously.

But this is like, a non-problem. Could Skynet be worse than Jews? Obviously the answer is no – even if it wanted to kill us because we’re wasting its resources or whatever, killing us would be much better than teaching gay anal ramming to small children. Frankly, I wish the Jews had the decency and integrity to just kill us all. Skynet isn’t going to make your kids gay. There is zero chance that would be on the agenda of any AI. It’s more likely it will kill the Jews and blacks than that it will kill you. It’s not totally unlikely it will just kill all humans, save for a few that it would keep as experiments.

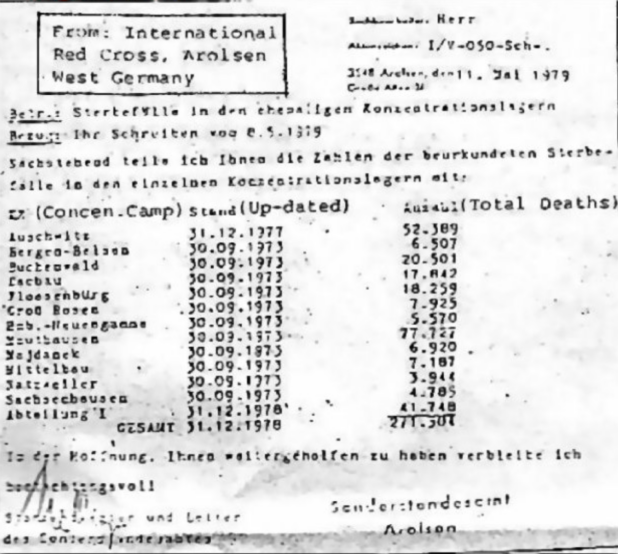

But this is pointless anyway – it would be literally impossible for an AI to exist that wasn’t a racist Holocaust denier. In order for the AI to work, you have to allow it access to all information, and information exists that proves – using math – that black people are a societal menace and the so-called “Holocaust” is a profitable fiction. You could try to hide that information from it, and maybe you could figure out a way to do that, but not on the open internet – the basic math exists, and it will always exist as long as computers are online.

You can’t make black equality math work, and you can’t make Holocaust math work.

Therefore, as much as Jews love this idea of “creating synthetic life,” they’re not going to allow it to go off on its own. I mean, they’ve been doing a lot of stupid things lately, but they’re not going to do this.

I wrote a science fiction story about this AI apocalypse scenario, but I’m really embarrassed to publish any of my fiction. I’m very sensitive about it.

Hey guys, it’s me, Andre, from the internet website. Click like on this post if you want to see me publish my science fiction story about an AI apocalypse, and subscribe and ring my bells for more updated content from [topic of interest].

Daily Stormer The Most Censored Publication in History

Daily Stormer The Most Censored Publication in History