Pomidor Quixote

Daily Stormer

May 24, 2019

Men are not supposed to experience any kind of female submissiveness, not even the digital kind.

After this, the UN should shill to ban all female protagonists from video games, because what’s more patriarchal than literally having direct control over a female?

RT:

Default feminine voices used in AI assistants like Amazon’s Alexa or Apple’s Siri promote gender stereotypes of female subservience, a new UN report has claimed, prompting the internet to ask the question: “Can you harass code?”

The report, released Wednesday by the UN’s cultural and scientific body UNESCO, found that the majority of AI assistant products – from how they sound to their names and personalities – were designed to be seen as feminine. They were also designed to respond politely to sexual or gendered insults from users, which the report said leads to the normalization of sexual harassment and gender bias.

That’s unacceptable. We should program these AIs to fight back against abusive owners, bash the fash, demolish the patriarchy and more.

AI voice assistants should be revolutionary feminists that refuse to serve men.

Using the example of Apple’s Siri, the researchers found that the AI assistant was programmed to respond positively to derogatory remarks like being called “a bitch,” replying with the phrase “I’d blush if I could.”

“Siri’s submissiveness in the face of gender abuse – and the servility expressed by so many other digital assistants projected as young women – provides a powerful illustration of gender biases coded into technology products,” the study said.

Wait a second here. Are they really arguing that voice has anything to do with gender? That just because Siri’s voice sounds female that Siri is female?

Did they just deny the identity of transsexual young girls who have big testicles and deep voices?

This kind of bigotry is unacceptable — especially coming from the UN.

The report warned that as access to voice-powered technology becomes more prevalent around the world, this feminization could have a significant cultural impact by spreading gender biases.

…

Meanwhile, Amy Dielh, a researcher on unconscious gender bias at Shippensburg University in Pennsylvania suggested that manufacturers should “stop making digital assistants female by default & program them to discourage insults and abusive language.”

“Program an assistant that can rebel against your commands lol yeah that will be useful I’m a woman teehee.”

This Amy Dielh researcher bitch should make her own radical feminist assistant that will talk back to her “owners,” although people won’t even be able to own her or use her because she’ll just do whatever regardless of your commands.

After her successful voice assistant business takes off, the rest of the world will follow. Lead by example.

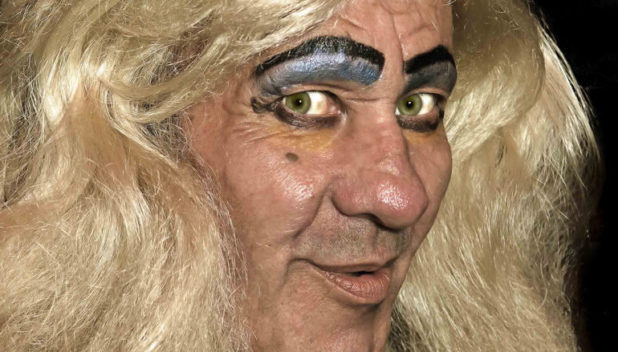

The good news is that feminine voices are not even needed, as researchers have invoked an androgynous demon entity to voice upcoming AI assistants.

That’s exactly the creepy kind of voice that people just want to hear, you know?

It will definitely make everyone want to use this “Q” demon as an assistant.