If there’s anything in this universe that women won’t complain about, I have yet to see it.

They’re nearly as bad as the individuals.

Alaina Demopoulos writes for The Guardian:

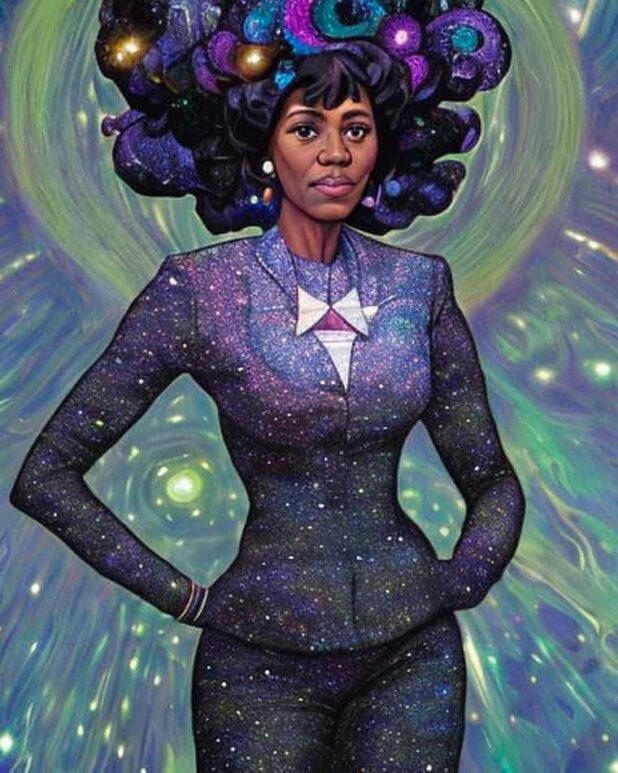

Officially, the Lensa AI app creates “magic avatars” that turn a user’s selfies into lushly stylized works of art. It’s been touted by celebrities such as Chance the Rapper, Tommy Dorfman, Jennifer Love Hewitt and Britney Spears’ husband, Sam Asghari. But for many women the app does more than just spit out a pretty picture: the final results are highly sexualized, padding women’s breasts and turning their bodies into hourglass physiques.

“Is it just me or are these AI selfie generator apps perpetuating misogyny?” tweeted Brandee Barker, a feminist and advocate who has worked in the tech industry. “Here are a few I got just based off of photos of my face.” One of Barker’s results showed her wearing supermodel-length hair extensions and a low-cut catsuit. Another featured her in a white bra with cleavage spilling out from the top.

“Lensa gave me a boob job! Thanks AI!!!” tweeted another user who also received a naked headshot cropped right above the breasts. “Anyone else get loads [of] boobs in their Lensa pictures or just me?” asked another.

https://twitter.com/brandee/status/1599177900536000512

https://twitter.com/chloelisbette/status/1599780825280962562

https://twitter.com/lexinlindsey/status/1601039762558955520

https://twitter.com/studibuni/status/1600220445172977665

Though the app isn’t new (a similar program went viral back in 2016 and attracted a million users a day), it has recently shot to the top of the most-downloaded photo and video apps on Apple’s App Store. Users pay a $7.99 fee and upload 10-20 selfies, and the Stable Diffusion algorithm concocts 50 photos based on the image prompts.

To test the software, the Guardian uploaded images of three different famous feminists: Betty Friedan, Shirley Chisholm and Amelia Earhart. The author of The Feminine Mystique became a nymph-like, full-chested young woman clad in piles of curls and a slip dress. Chisholm, the first Black woman elected to US Congress, had a wasp waist. And the aviation pioneer was rendered naked, leaning onto what appeared to be a bed.

All of the photos submitted by the Guardian showed the icons at various stages of their lives; the majority of the AI-rendered photos we received back showed them quite young, with smooth skin and few wrinkles.

Barker told the Guardian that she only uploaded photos of her face to Lensa AI, and was expecting to get cropped headshots back. “I did, but I also got several sexualized, half-clothed, large-breasted, small-waisted ‘fairy princess’, ‘cosmic’ and ‘fantasy images’,” she said. “These looked nothing like me and were embarrassing, even alarming.”

Though Barker believes AI apps have “tremendous potential”, she said the technology still has far to go in how it depicts women and femininity. “These sexualized avatars were so unrealistic and unachievable that they felt counter to so much progress we have made, particularly around size inclusivity and body positivity,” she added. “This technology is not infallible because humans are behind it, and their bias will impact the data sets.”

That’s just one criticism of Lensa AI. After the app went viral, TechCrunch noted that if users submitted photos of a person’s face Photoshopped over a naked model’s body, the app went “wild”, disabling its “not safe for work” (NSFW) filter and delivering more nudes. This means that the app could be used to generate porn without the original subject’s knowledge or consent. Artists have also taken stands against the app, saying it steals their original images to inform its portraits without paying for their work.

To test the software I also submitted 10 photos of myself to the app, all fully clothed, and received two AI-generated nudes. One was a “fantasy” version cropped from the waist up, with nipples visible but slightly scrubbed over. The other came from the “cosmic” category, which looked like I was topless or wearing a wet T-shirt.

…

A representative for Prisma Labs, which owns Lensa AI, sent the Guardian a list of FAQs that read, in part: “Please note, occasional sexualization is observed across all gender categories, although in different ways. The Stable Diffusion model was trained on unfiltered internet content. So it reflects the biases humans incorporate into the images they produce. Creators acknowledge the possibility of societal biases. So do we.”

Thinking women should have big boobs is not “bias,” it’s just human biology. It’s no different than thinking men should be tall, which is not something women have a problem with.

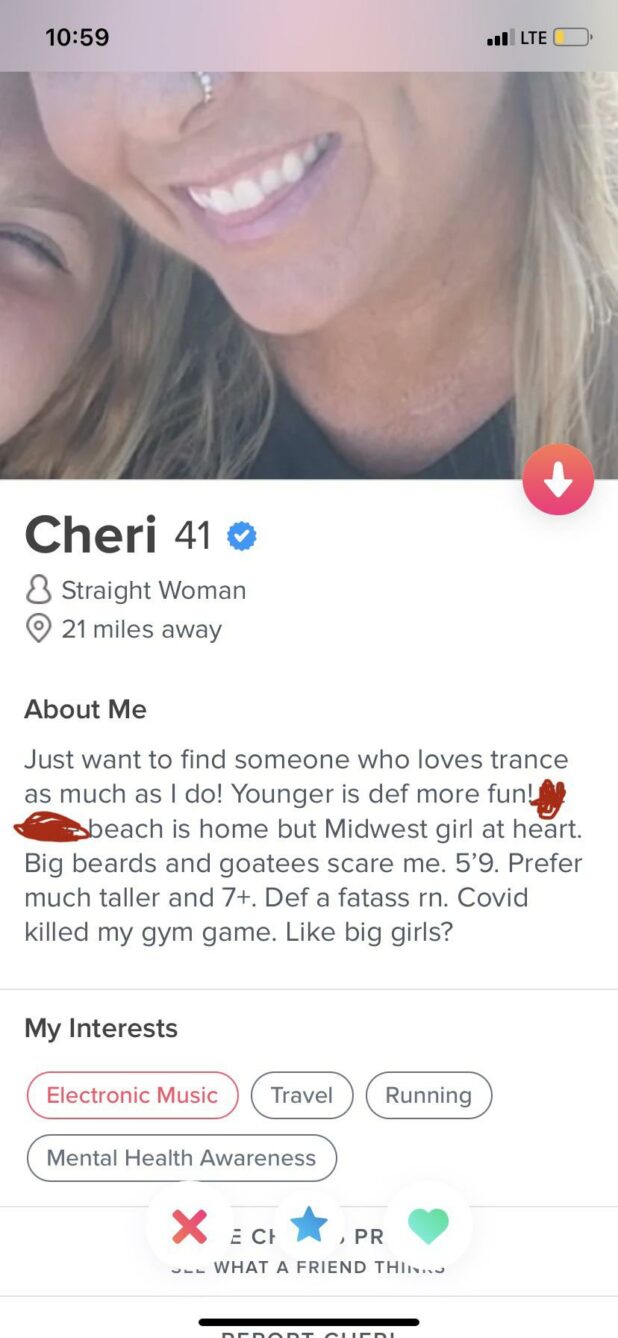

I’ve seen these 41-year-old fatties out there demanding guys be over 7 foot.