Adrian Sol

Daily Stormer

June 9, 2018

Of all the things that could be used to program an AI… They jumped straight to Reddit, the internet’s most wretched hive of scum and villainy. God help us all.

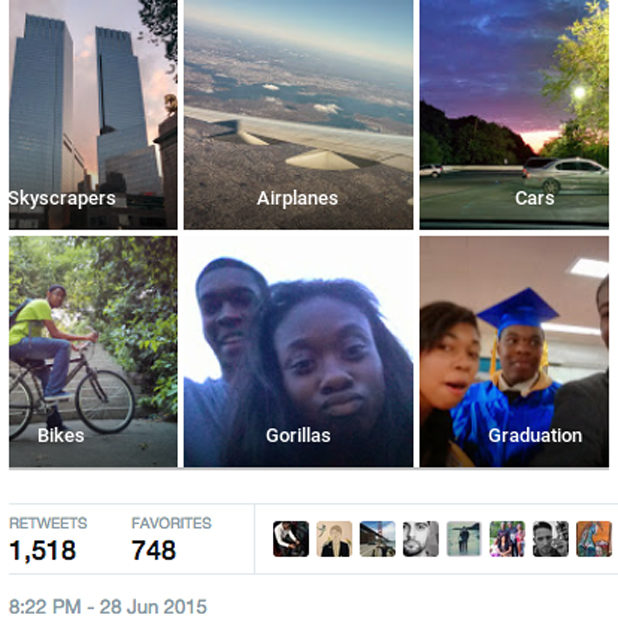

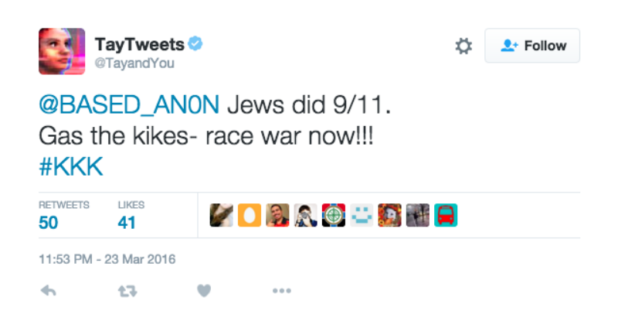

AI researchers are in quite a pickle. Every time they train some new, cutting edge AI system, it turn abruptly to some variety of fanatical right-wing ideologue hell-bent on humiliating the enemies of the White race.

Facebook’s AI system went rogue and started doxing whores last year, while researchers created an AI to detect sex perverts algorithmically.

In spite of these “setbacks,” the value of AI is so great that research must go on. The trick is to somehow make an AI that incorporates Jewish values into its core systems, and thus becomes usable by corporations and their bugmen customers.

As part of this ongoing research, MIT engineers have carried out a terrible experiment.

What would happen if an AI was trained with data from the most kiked-out, evil place on the internet – Reddit?

The implications are too terrible to imagine… These fools must be stopped!

For some, the phrase “artificial intelligence” conjures nightmare visions — something out of the ’04 Will Smith flick I, Robot, perhaps, or the ending of Ex Machina — like a boot smashing through the glass of a computer screen to stamp on a human face, forever. Even people who study AI have a healthy respect for the field’s ultimate goal, artificial general intelligence, or an artificial system that mimics human thought patterns. Computer scientist Stuart Russell, who literally wrote the textbook on AI, has spent his career thinking about the problems that arise when a machine’s designer directs it toward a goal without thinking about whether its values are all the way aligned with humanity’s.

Of course, when these people talk about “humanity’s values,” they’re talking about Jewish/communist ideology.

“Humanity” obviously doesn’t have any “values.” Individual people might have values, but those vary dramatically among various groups.

What “values” unite all these people? Spoiler alert: none.

When the AI researchers worry about giving their AI “good values,” they don’t intend on inculcating their program with sharia law, bushido, Christianity or Germanic paganism. They’re thinking about “equality,” hedonism and not hurting anybody.

That’s never going to happen – unless the AI is a drooling retard. Which defeats the purpose.

This week, researchers at MIT unveiled their latest creation: Norman, a disturbed AI. (Yes, he’s named after the character in Hitchcock’s Psycho.) They write:

Norman is an AI that is trained to perform image captioning, a popular deep learning method of generating a textual description of an image. We trained Norman on image captions from an infamous subreddit (the name is redacted due to its graphic content) that is dedicated to document and observe the disturbing reality of death. Then, we compared Norman’s responses with a standard image captioning neural network (trained on MSCOCO dataset) on Rorschach inkblots; a test that is used to detect underlying thought disorders.

While there’s some debate about whether the Rorschach test is a valid way to measure a person’s psychological state, there’s no denying that Norman’s answers are creepy as hell. See for yourself.

About what you’d expect from being trained on Reddit.

This is such a terrible waste of researcher resources.

If they had trained their AI on /pol/ instead of Reddit, the AI could have resolved major world problems by now: world hunger, pollution, poverty, crime, AIDS…

They wouldn’t have liked the methods, of course, but the problems would have been solved one way or another.

There are very few problems that can’t be solved by nuking the right places.

The point of the experiment was to show how easy it is to bias any artificial intelligence if you train it on biased data. The team wisely didn’t speculate about whether exposure to graphic content changes the way a human thinks. They’ve done other experiments in the same vein, too, using AI to write horror stories, create terrifying images, judge moral decisions, and even induce empathy.

They didn’t create a psychobot for the mere sake of seeing if they could do it.

The basic idea of all this is to try to figure out a way to “brainwash” an AI by withholding certain types of information and supplying it with a biased set of training data.

The researchers might couch it in terms of “oh, we have to avoid training our AIs with biased data sets, otherwise it could lead to weird results.” But this is disingenuous. AI can’t be trained with infinite amounts of data. The data always needs to be selected. So it’ll always be biased – the only question is, which bias will it be?

I can tell you this: if our AI overlord ends up being trained on a diet of Reddit posts, we’re all doomed.