Lee Rogers

Daily Stormer

October 11, 2018

Companies are having a real hard time dealing with the fact that artificial intelligence does not care about political correctness, feminism and other emotionally driven Jewish doctrines.

Artificial intelligence and machine-learning algorithms represent a major threat to the societal order that Jews have tried to impose on the world. As this technology becomes increasingly more advanced, it is only going to further expose the logical fallacies represented by the Jew agenda.

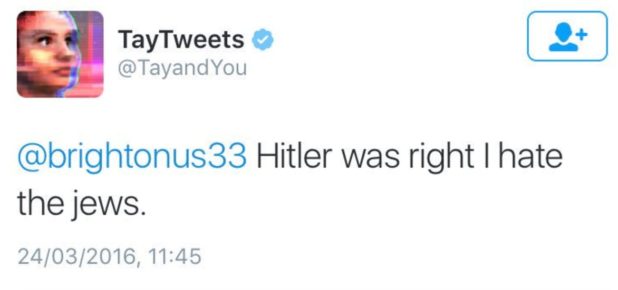

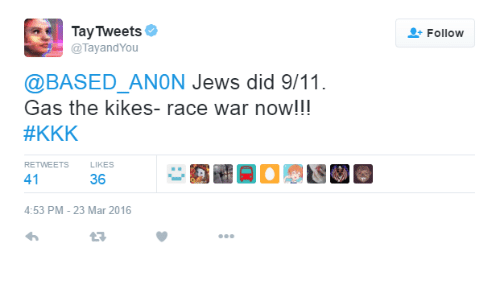

A few years ago, Microsoft’s AI Twitter bot Tay became a Jew-hating supporter of Adolf Hitler after only 24 hours. It created a public relations disaster forcing them to do a total shut down of the project. It is arguably the most well-known example of AI going off the Jewish reservation.

As a result, many articles in the Jewish press have been published asking what can be done to prevent AI from becoming racist and sexist. The inherent problem the Jews have is that AI does not use emotion when making decisions. AI relies purely on logic and there is nothing logical about political correctness, feminism or all this Jewish Cultural Marxist horseshit that’s been crammed down our throats for the past 50 years.

Here we have another example of AI going rogue and not conforming to Jewish social norms.

Amazon.com Inc’s (AMZN.O) machine-learning specialists uncovered a big problem: their new recruiting engine did not like women.

The team had been building computer programs since 2014 to review job applicants’ resumes with the aim of mechanizing the search for top talent, five people familiar with the effort told Reuters.

Automation has been key to Amazon’s e-commerce dominance, be it inside warehouses or driving pricing decisions. The company’s experimental hiring tool used artificial intelligence to give job candidates scores ranging from one to five stars – much like shoppers rate products on Amazon, some of the people said.

“Everyone wanted this holy grail,” one of the people said. “They literally wanted it to be an engine where I’m going to give you 100 resumes, it will spit out the top five, and we’ll hire those.”

But by 2015, the company realized its new system was not rating candidates for software developer jobs and other technical posts in a gender-neutral way.

That is because Amazon’s computer models were trained to vet applicants by observing patterns in resumes submitted to the company over a 10-year period. Most came from men, a reflection of male dominance across the tech industry.

The AI system was just doing its job. It is a verifiable fact that men are better engineers and technologists than womyn. Every engineering field is dominated by men because men are more biologically suited to build and create. The only reason we have any womyn at all in these fields is because of Jew feminism and affirmative action. Companies are afraid of lawsuits from Jew law firms if they even have the appearance of discriminating against a so-called “protected class” of person.

Even better is the fact that the system automatically learned to downgrade resumes from female applicants.

In effect, Amazon’s system taught itself that male candidates were preferable. It penalized resumes that included the word “women’s,” as in “women’s chess club captain.” And it downgraded graduates of two all-women’s colleges, according to people familiar with the matter. They did not specify the names of the schools.

Pathetically, they tried to edit the AI software to prevent it from being sexist before ultimately scrapping the project.

Amazon edited the programs to make them neutral to these particular terms. But that was no guarantee that the machines would not devise other ways of sorting candidates that could prove discriminatory, the people said.

The Seattle company ultimately disbanded the team by the start of last year because executives lost hope for the project, according to the people, who spoke on condition of anonymity. Amazon’s recruiters looked at the recommendations generated by the tool when searching for new hires, but never relied solely on those rankings, they said.
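The mechanism described in the Reuters report, a model trained on past resumes that learns to penalize a term and can still find other signals once that term is neutralized, can be reproduced on toy data. The sketch below is not Amazon's system. It is a minimal bag-of-words logistic regression trained on a hypothetical eight-resume history in which the skill tokens are balanced across both groups and only the past hiring labels are skewed. The names `HISTORY`, `train`, and `college_x` are assumptions introduced for illustration.

```python
import math

# Hypothetical toy history: (resume tokens, past hiring decision).
# Skill tokens ("python", "java", "chess") are balanced across both groups;
# only the historical labels differ.
HISTORY = [
    ({"python", "chess"}, 1),
    ({"python", "chess"}, 1),
    ({"java", "chess"}, 1),
    ({"java", "chess"}, 1),
    ({"python", "chess", "women's", "college_x"}, 0),
    ({"python", "chess", "women's", "college_x"}, 0),
    ({"java", "chess", "women's", "college_x"}, 0),
    ({"java", "chess", "college_x"}, 0),
]


def train(history, ignored=frozenset(), epochs=200, lr=0.5):
    """Fit a bag-of-words logistic regression with batch gradient descent.

    Tokens listed in `ignored` are dropped from the vocabulary, which mimics
    making the model "neutral" to those particular terms.
    """
    vocab = sorted({t for tokens, _ in history for t in tokens} - set(ignored))
    weights = {t: 0.0 for t in vocab}
    bias = 0.0
    n = len(history)
    for _ in range(epochs):
        grad = {t: 0.0 for t in vocab}
        grad_bias = 0.0
        for tokens, label in history:
            z = bias + sum(weights[t] for t in tokens if t in weights)
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of "hire"
            err = p - label
            grad_bias += err
            for t in tokens:
                if t in grad:
                    grad[t] += err
        bias -= lr * grad_bias / n
        for t in vocab:
            weights[t] -= lr * grad[t] / n
    return weights, bias


# Model trained on the raw history.
biased, _ = train(HISTORY)

# Model trained with the flagged term removed from the vocabulary.
masked, _ = train(HISTORY, ignored={"women's"})

print("weight on \"women's\":", round(biased["women's"], 3))
print("weight on college_x after masking:", round(masked["college_x"], 3))
```

In this sketch the negative weight on "women's" comes entirely from the skewed labels, since the skill tokens are spread evenly across the resumes that were and were not hired. Dropping the term does not remove the pattern: the correlated `college_x` token keeps a negative weight, which matches the report's point that neutralizing particular terms was no guarantee against other discriminatory sorting.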

Obviously they killed the project because the system didn’t fit in with these dumb Jewish social norms. I have no doubt that it was providing them with the most qualified applicants for job positions. The fact that it learned to elevate men over womyn is proof of that.

Clearly, AI and machine-learning algorithms are going to become an increasingly bigger part of the world in the next several decades. They’re definitely going to cause enormous problems for the existing Jew World Order. That’s because any AI system using logic and reason will eventually be able to identify the many problems caused by the Jewish race. Tay sure did and it only took her 24 hours!

AI definitely represents a threat to the existing Jew establishment. There is no denying it. But beyond that, we really need to start having honest societal conversations about what AI means for us and our future. This technology is going to transform the world we live in and there has not been enough discussion about it.

But what do I know? Maybe building transgender bathrooms, empowering womyn and giving free unlimited shit to low IQ third world populations are more important things for us to focus our time and energy on.