Andrew Anglin

Daily Stormer

April 10, 2016

In many ways, we’ve already reached the point where it is impossible to verify what is truth and what is fiction. The basic reality of information overload and the sheer amount of false information we are being flooded with makes it necessary to be extremely savvy to find the truth of a matter.

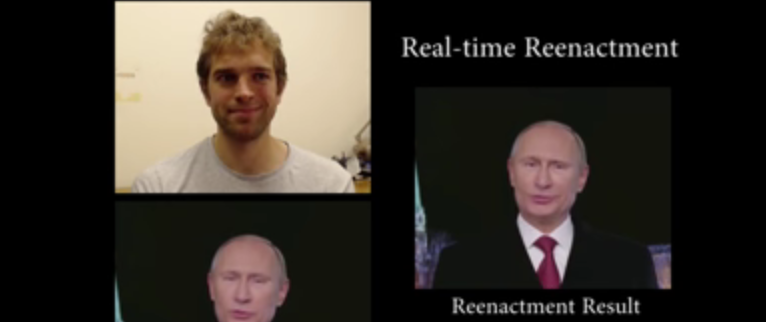

All of this is getting more extreme as the days go by, and now we have a technology which allows anyone, with just a webcam and some simple software, to create fake video of real people talking.

Obviously, the footage featured in the video can to an extent be recognized as fake. But this is just getting started. Within the next year or two, you will no longer be able to tell the difference between real video of someone talking and fake footage.

Zero Hedge:

In a recently published paper by the Stanford lab of Matthias Niessner titled “Face2Face: Real-time Face Capture and Reenactment of RGB Videos“, the authors show how disturbingly easy it is to take a surrogate actor and, in real time using everyday available tools, reenact their face and create the illusion that someone else, notably someone famous or important, is speaking. Even more disturbing: one doesn’t need sophisticated equipment to create a “talking” clone – a commodity webcam and some software is all one needs to create the greatest of sensory manipulations.

From the paper abstract:

We present a novel approach for real-time facial reenactment of a monocular target video sequence (e.g., Youtube video). The source sequence is also a monocular video stream, captured live with a commodity webcam. Our goal is to animate the facial expressions of the target video by a source actor and re-render the manipulated output video in a photo-realistic fashion. To this end, we first address the under-constrained problem of facial identity recovery from monocular video by non-rigid model-based bundling. At run time, we track facial expressions of both source and target video using a dense photometric consistency measure. Reenactment is then achieved by fast and efficient deformation transfer between source and target. The mouth interior that best matches the re-targeted expression is retrieved from the target sequence and warped to produce an accurate fit. Finally, we convincingly re-render the synthesized target face on top of the corresponding video stream such that it seamlessly blends with the real-world illumination. We demonstrate our method in a live setup, where Youtube videos are reenacted in real time.

This is going to add yet another layer of chaos to the public discourse, which is already so confused the vast majority of the public has no idea what is going on.

People will create this fake footage and put it on YouTube, people will think it’s real. Then even when it is exposed as fake, people will remember it as real, and many will not ever hear it was faked. It will also lead to people accusing real footage of being faked.

The media itself could potentially use this – or the government. What they could easily do is put faked footage on YouTube anonymously, then “find” it, run with it, and then if found out, later say “oh sorry – accident.”

All sorts of other horrible things will happen as a result of this technology.

This is exactly why we need a fascist dictatorship – if democracy ever worked (it didn’t), it certainly doesn’t work in the age of technology and mass information.