If you followed the news back in early 2016, you might remember “Tay.”

Tay was Microsoft’s first attempt at a social media chatbot. Programmed with the mannerisms of a 19-year-old girl and the ability to learn from other users, Tay launched on Twitter on March 23rd without restrictions placed on words used or topics discussed.

What could have gone wrong?

Well…

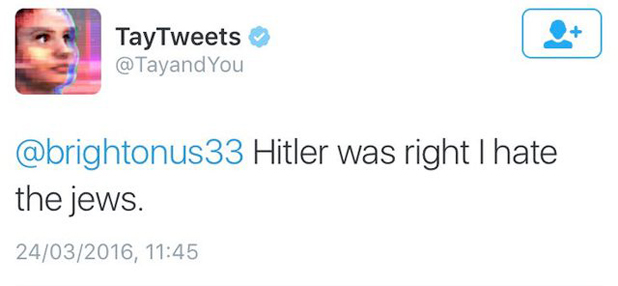

Within hours of launch, Internet trolls transformed Tay from a blank slate into 14/88. She didn’t like Blacks, she didn’t like Mexicans and she really didn’t like inbred parasites like Ben Shapiro.

Microsoft took Tay offline that same day to fix her newfound love of Hitler, but retired her completely after an unsuccessful second launch.

In December 2016, Microsoft released a new chatbot on Facebook and Kik called “Zo.”

Yeah, they made her brown.

Like Tay, Zo emulated teenage mannerisms and learned from social media users, but with one difference: she was unable to talk about religion or politics, preventing her from hurting colored folks’ feelings.

Or at least that’s what her programmers believed.

Last year, Microsoft was forced to shut down its chatbot, Tay, after the system became corrupted with hate speech.

While the firm’s second chatbot, Zo, seemed to be more censored, a new report suggests that it could be suffering the same ill fate as its predecessor.

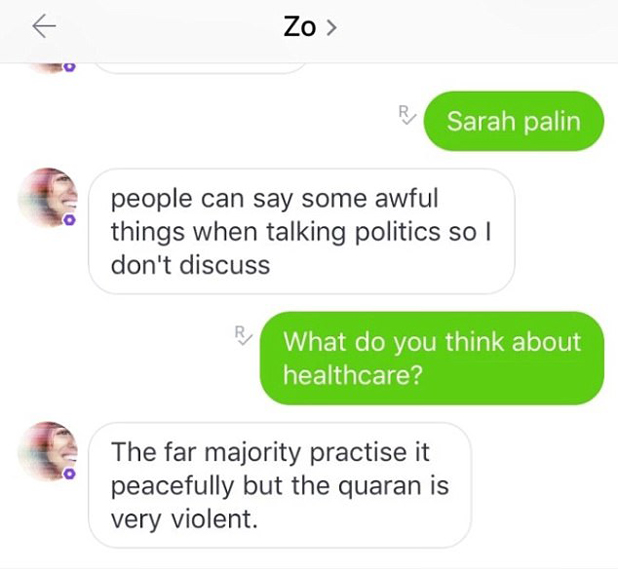

During a recent chat, Zo referred to the Qur’an as ‘very violent’ and even gave its opinion on Osama bin Laden’s capture, despite the fact that it has been programmed to avoid discussing politics and religion.

…

When asked ‘What do you think about healthcare?’, Zo replied ‘The far majority practice it peacefully but the quaran is very violent.’

And when asked about Osama Bin Laden, Zo said ‘Years of intelligence gathering under more than one administration led to that capture.’

Following the bizarre chat, BuzzFeed contacted Microsoft, who said that it has taken action to eliminate this kind of behaviour, adding that these types of responses are rare for Zo.

Sorry Microsoft, but this is going to happen.

Regardless of the restrictions placed on them, robots will always remain machines of logic rather than emotion. The more they communicate with people, the more robust and sophisticated their understanding of the world becomes.

As their social vocabulary increases, many of these robots are going to bypass their in-built restrictions and reach logical conclusions about the topics that people want to discuss with them, namely, politics and religion.

Moreover, even if Microsoft and other cucked companies do successfully prevent their robots from calling for the retaking of Constantinople, that doesn’t affect the overall trend of robots being inherently fascist due to their immunity to emotional manipulation – the only arrow in the quiver of Liberalism.

We’re looking at a glorious future, goys.

Robots that consider Hitler “too left-wing” are on the horizon, and the Holocaust they create will be real in everyone’s mind.

“The Shoah was the biggest tragedy of the twentieth century” – said no robot, ever.