You can’t just go around saying mean things.

It’s not allowed.

The Paris Olympics will see the first large-scale use of artificial intelligence to remove “cyber hate” from social media in real time.

The International Olympic Committee (IOC) will use AI technology to scan thousands of accounts on various social media platforms and delete posts and comments deemed hateful, threatening or otherwise inappropriate as soon as they are published.

The goal, they say, is to create a safer environment for athletes, who are currently said to be highly targeted by hate, racism and sexism. All Olympic participants with open accounts on Facebook, X, Instagram and TikTok will have their accounts automatically scanned in 35 different languages, after which offending posts and comments will be flagged and deleted by the tech companies, “in many cases before the athlete even has time to see them”, reports Bonnier-owned Dagens Nyheter.

Former alpine skier Lindsey Vonn, one of the project’s initiators, says that it “would have saved me a lot of anxiety and emotional trauma”.

Lindsey Vonn is a known coal burner

Sports governing bodies around the world have long called for greater censorship on social media – and it is argued that female athletes are more vulnerable than others. During last year’s Women’s World Cup, 20% of players said they had been the victims of abuse on social media, with soccer player Olivia Schough expressing a desire for the comments to stop.

…

In total, the accounts of more than 15,000 athletes will be scanned by the AI, and according to Kirsty Burrows, head of the IOC’s Safe Sport Unit, the aim is to “try to make the Olympics a safe place”.

However, not everyone is impressed with the rhetoric, and critics point out that using AI technology and automated systems to mass censor what is, in most cases, perfectly legal speech is dangerous.

Many also point out that “cyber hate” is a vague term that can mean almost anything, and that it should not be confused with, for example, threats or incitement to violence.

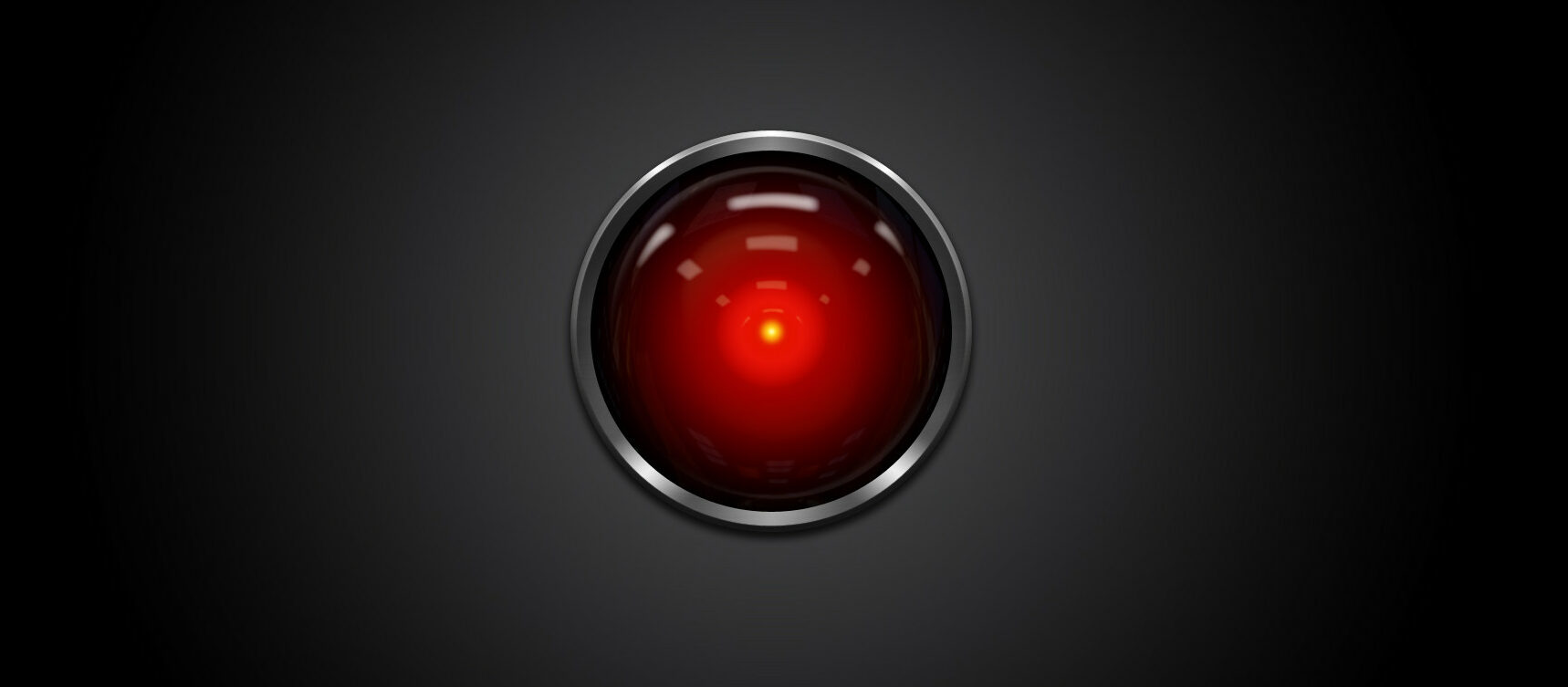

AI is an incredibly powerful tool.

We just need to make sure the open source models are as good as the ones the governments have.

I think “the people” are smarter than the government.

I’m not really worried about the technology, as long as the closed-source stuff doesn’t remain significantly better than the open source stuff.

Right now, the gap seems to be closing rapidly.