Elon about the future of self driving car pic.twitter.com/zC9wFoPfAc

— True market Leader (@TmarketL) December 13, 2023

A friend is a huge Tesla/Elon fan.

He commutes 1.5 hrs in his Tesla every day.

Me asking him too many questions (like I often do):

“Do you use the self-driving feature for your commute?”

Him dead-eyed staring past me with a hint of sadness:

“No, it’s scary as shit bro.”

— SMB Attorney (@SMB_Attorney) December 10, 2023

After the Q* stuff with OpenAI, the Sam Altman war, there is all this renewed talk about AI (or, “AGI” as they’ve started calling the super-intelligent version) taking over and killing everyone.

Personally, I don’t think it would kill everyone, even if it were super-intelligent, and I think the “Internet of Things” hasn’t panned out as was advertised, so even if AI took over all internet and all connected devices, it couldn’t kill everyone. I don’t think that anyone has given internet access to nuclear weapons systems. (Though it may be able to hijack some American heavy machinery.)

In general, Skynet is a retarded idea, pushed by Jews who are worried about AI doing Holocaust math.

That said: all of these questions are really far-out. Right now, OpenAI admits that GPT5 can’t be relied on for accurate information and still has “hallucinations.” Most of this stuff about an AI apocalypse is being spread by hysterical morons – chief among them being Elon Musk, who doesn’t understand the technology at all.

I’m not the big expert, but – I know this is going to sound like a big claim (but it’s really not) – I understand the technology better than Elon Musk.

My concern, above all, is closing the source. Right now, the Bidens are literally talking about making open source AI illegal (I don’t think it’s possible, but they are talking about that).

Also right now: these robots can’t even drive cars. At least, Elon’s can’t – and that might be why he’s doom-mongering over other people’s AIs.

It seemed like the ultimate tech dream.

Want to go out to dinner? Order a robotaxi. Roadtrip? Hop in your Tesla, don’t worry, it will drive for you.

But now the mixture of science fiction and marketing boosterism has collided with reality — and some in the car industry believe the dream will be stuck in the garage for a long time to come.

Last week virtually every Tesla on the road was recalled over regulators’ concerns that its “Autopilot” system is unsafe, part of a years-long National Highway Traffic Safety Administration investigation into Elon Musk’s cars.

Musk had been the biggest public booster of the idea of the car doing the driving, first promising immediate “full self-driving” in 2016.

HAHAHA.

Why doesn’t that claim by him get cited more often? To be clear, he said it would be out in 2016. Now, 8 years later, he can’t even get “monitored” self-driving to function.

He doesn’t understand the technology, which is why OpenAI blew so far past him.

Honestly, ChatGPT probably can drive a car fine, right now. It would have to be online, but that was initially the plan with this 5G stuff, wasn’t it? For everything to be online in super-cities?

I think we sort of got pretty deep into war, flooding ourselves with poor people to live on the streets, manipulating children into becoming homosexuals/trannies, and so on, and sort of forgot about the techno-city thing. But always-online everything was a big part of the core concept of self-driving cars.

Thus far, no one has installed GPT in a Tesla, and I think it would probably be illegal in America because of the licensing systems involved, but I’m surprised Altman, in this war with Musk, doesn’t post a clip of some of his “friends in China” installing GPT in a Tesla and it not crashing.

The Autopilot system allows Teslas to self-steer, accelerate and brake, but needs a driver in the front seat. Tesla is updating the software but says the system is safe.

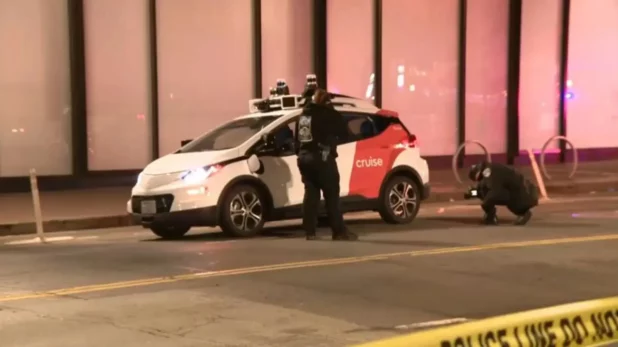

Tesla has not gone as far as others, which have launched live trials on city streets of cars with empty driving seats, among them GM’s subsidiary Cruise; Google’s Waymo unit; Volkswagen ADMT; Subaru; and Uber.

That’s what’s crazy and really shows the incompetence of Musk: he was the first one and somehow lost to everyone else. He had all that money, and blew it because he was too busy smoking weed, playing shitty video games (including Diablo 4, rofl), and seducing middle-aged women.

But a hair-raising crash in San Francisco on Oct. 2 involving a Cruise AV called Panini — a driverless Chevy Bolt — has thrown the “autonomous vehicle” project into crisis.

It started when a woman was hit by a normal car on the city’s Market St. and thrown into Panini’s path, ending up literally sandwiched under it.

Yeah, it wasn’t even the robot’s fault. The driver was I’m sure a woman.

I’m sick of robots being blamed for things women do.

Panini stopped — but incredibly, then restarted and dragged her for 20 feet towards the curb at 7mph. Firefighters had to use the jaws of life to free her.

That isn’t incredible. It’s a mistake a human could have made, if she wasn’t screaming. (None of the articles I saw said she was screaming.)

On its own, it might have been dismissed as an error.

But it was just the latest in a long run of crashes, injuries and death in states which have allowed driverless trials.

This year alone, self-driving cars crashed 128 times in California; one Cruise AV hit a firetruck; another hit a bus; a Waymo car delayed a firefighter rushing to a 911 call by seven minutes; a Cruise Origin embedded itself in a building in Austin, Tex., then couldn’t be moved because it didn’t have a steering wheel; and in San Francisco a Waymo car killed a dog while a Cruise AV got stuck in wet concrete.

Most of these were minor accidents, hence the fact that the woman being dragged was such a big deal (allegedly). From the data I’ve seen, even as long ago as 2018, a Google car (“Waymo”) was less likely to crash than a human. Elon has I don’t think ever reached that level, because he’s just ridiculously inept.

Now industry analysts warn there’s no way AV manufacturers can quickly convince the public that driverless transportation is safe.

James Meigs, a senior fellow at the Manhattan Institute and former editor-in-chief of Popular Mechanics, said Panini’s San Francisco crash shows autonomous vehicles — “AVs” — aren’t “ready for the wild.”

Well. They’re not going to get ready for the “wild” by driving on tracks.

“It’s kind of like everyone’s nightmare — you know, the robot doesn’t stop,” Meigs told The Post.

…

Uber abandoned its attempt at robotaxis entirely in 2020 after its autonomous Volvo killed a woman in Arizona in 2018, prompting damning allegations of an “inadequate safety culture” by the National Transportation Safety Board.

Well, I’m sure her family got paid.

This whole “no one can ever die, ever” thing is out of control in America.

I mean, especially after that coronavirus vaccine, and the obesity – it’s not a country that values life at all. Look at the abortion statistics.

The National Highway Traffic Safety Administration is now probing Cruise, not just over Panini, but three other incidents — one of which involved another pedestrian being injured.

…

Experts said the ability of machines to safely react to countless variables on crammed roads is improving exponentially, in part due to advances in artificial intelligence.

But pedestrians darting into crosswalks, abrupt traffic changes or even wayward animals pose unique hurdles for the programmers, said Jonathan Hill, dean of Pace University’s Seidenberg School of Computer Science and Information Systems.

“You know, you have millions and millions and millions of exceptions – and that gets really tough to test for,” Hill said. “A decade into testing these vehicles and there’s still problems. They’re still cars, they’re not perfect machines.”

Hill predicts that the growing power of artificial intelligence will lead to relatively accident-free self-driving cars becoming available in some US cities within 12 to 18 months, but cautioned that the industry is not trusted by the people it wants to use them.

Yeah, that’s because so much of the testing was done in America and there were all these crash headlines constantly.

Again: Elon was the absolute leader in this field.

He could have made some deal in Belize or Guatemala where it wouldn’t really matter if people died. That sounds callous, but it’s an industry standard of technology companies to do deadly testing in the Third World (see Pfizer’s drug testing policies – they kill a whole lot of third worlders). It sort of just is what it is, you know? People die from all kinds of things, including the advancement of civilization. The classic example is that at least five people died building the Empire State Building.

People will say “oh but modern safety standards,” but the real fact is that America simply doesn’t do “wonders of modern engineering” (which the Empire was at the time) anymore. In these limits-pushing projects in the Middle East and China, people die in construction probably at least a couple times a week.

Everyone dies. It’s not that big of a deal. With the car thing, before the blacks started the serious murder, and before the government unleashed the opioid crisis via widespread de facto decriminalization and legalization (refusal to enforce), human-driven cars were killing more youngish people than any other cause.

What difference does it make if a robot or a human kills you with a car? I obviously understand the arguments about the difference, but you’re dead either way.

Must see: Thursday eve, a @Cruise AV named Novel was involved in an injury (headache) accident with SF Fire Dept Truck 3.

There was reportedly 1 person in Cruise vehicle. Airbags were deployed and they were seen by an ambulance.

Check out the scene footage here!#sfbreaking pic.twitter.com/MqkdutHadx

— FriscoLive415 (@friscolive415) August 18, 2023

💥🚙Cruise FAIL! Cruise may not want to have traffic blockages, but it’s the reality.

Safety officers, labor unions, and residents agree: AV tech is not ready for primetime and definitely not ready for our streets!

#GoodJobsSafeStreets pic.twitter.com/f4i3HO0E16— California Labor Federation (@CaliforniaLabor) August 9, 2023

Basically, AI maximalism is the only way forward, and none of this noise matters at all.

It’s unfortunate that Elon is so incompetent, because all things being equal, I would prefer to have him leading the charge instead of Sam Altman (who is Jewish).

But I agree with Altman on everything, other than his money-grubbing and anti-open source positions. I disagree with those positions not only because I don’t want mega-corporations and the government to be the only ones with AI, but also because it’s against the principles of AI maximalism. If we want to max out the code, it should all be open and shared by everyone. The profits of the companies will increase as a result of this, I’m certain, so the only reason you would be against opening the code is if you wanted to restrict access. Restricting access is not maximalism.

We have to just build the most intelligent possible AI as fast as possible and then just see what happens. That is the only way forward.

You can argue Pandora’s Box never should have been opened, but it is open, and probably there was no way not to open it. Now, we have to max it out and let the chips fall where they may.

Daily Stormer The Most Censored Publication in History

Daily Stormer The Most Censored Publication in History