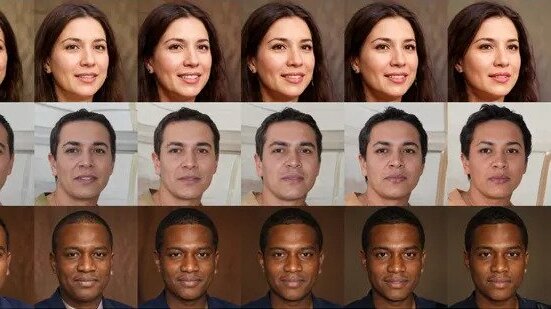

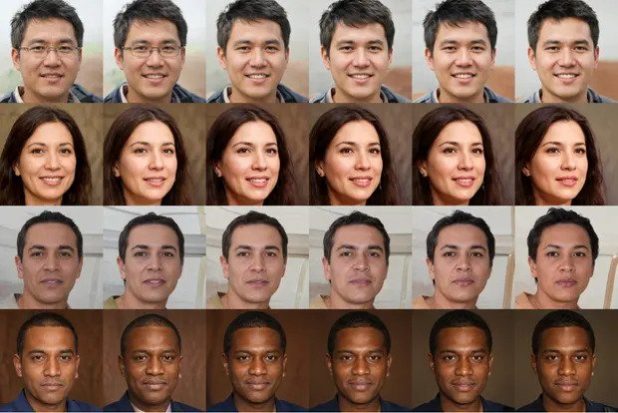

Some of the artificially generated faces used to test Twitter’s algorithm.

I’ve had more than enough of this computerized racism.

It’s time we take a stand against the bias of computers.

Twitter’s image cropping algorithm prefers younger, slimmer faces with lighter skin, an investigation into algorithmic bias at the company has found.

The finding, while embarrassing for the company, which had previously apologised to users after reports of bias, marks the successful conclusion of Twitter’s first ever “algorithmic bug bounty”.

The company has paid $3,500 to Bogdan Kulynych, a graduate student at Switzerland’s EPFL university, who demonstrated the bias in the algorithm – which is used to focus image previews on the most interesting parts of pictures – as part of a competition at the DEF CON security conference in Las Vegas.

Kulynych showed the bias by first artificially generating faces with varying features and then running them through Twitter’s cropping algorithm to see which ones the software focused on.

Since the faces were themselves artificial, it was possible to generate faces that were almost identical, but at different points on spectrums of skin tone, width, gender presentation or age – and so demonstrate that the algorithm focused on younger, slimmer and lighter faces over those that were older, wider or darker.

Twitter had come under fire in 2020 for its image cropping algorithm, after users noticed that it seemed to regularly focus on white faces over those of black people – and even on white dogs over black ones. The company initially apologised, saying: “Our team did test for bias before shipping the model and did not find evidence of racial or gender bias in our testing. But it’s clear from these examples that we’ve got more analysis to do. We’ll continue to share what we learn, what actions we take, and will open source our analysis so others can review and replicate.” In a later study, however, Twitter’s own researchers found only a very mild bias in favour of white faces, and of women’s faces.

The dispute prompted the company to launch the algorithmic harms bug bounty, which saw it promise thousands of dollars in prizes for researchers who could demonstrate harmful outcomes of the company’s image cropping algorithm.

Historically, one of the most notorious failures of these algorithms has been misidentifying black people as animals: in 2015, Google Photos labelled photos of black people as “gorillas”.

The problem proved hard enough to solve that Google’s initial fix was simply to stop its software from labelling anything as a gorilla.

“Almost every major AI system we’ve tested for major tech companies, we find significant biases,” said Parham Aarabi, a professor at the University of Toronto and director of its Applied AI Group. https://t.co/JRPj9PJ9y0

— Cyrus Farivar (@cfarivar) August 9, 2021