In order for democracy to function, all information consumed by the public has to be officially approved by the government.

Without total information control, democracy, the perfect system of government, collapses.

People think that just because democracy is perfect it must be strong. But no. It is so weak that it can be destroyed by news articles on people’s phones.

We cannot lose our democracy. We will lose our child trannies, we will lose our windmills, we will lose our open borders, we will lose the war in the Ukraine.

We have to shut down all information not explicitly approved by the government.

The AI election is here.

Already this year, a robocall generated using artificial intelligence targeted New Hampshire voters in the January primary, purporting to be President Joe Biden and telling them to stay home in what officials said could be the first attempt at using AI to interfere with a US election. The “deepfake” calls were linked to two Texas companies, Life Corporation and Lingo Telecom.

It’s not clear if the deepfake calls actually prevented voters from turning out, but that doesn’t really matter, said Lisa Gilbert, executive vice-president of Public Citizen, a group that’s been pushing for federal and state regulation of AI’s use in politics.

“I don’t think we need to wait to see how many people got deceived to understand that that was the point,” Gilbert said.

Examples of what could be ahead for the US are happening all over the world. In Slovakia, fake audio recordings might have swayed an election in what serves as a “frightening harbinger of the sort of interference the United States will likely experience during the 2024 presidential election”, CNN reported. In Indonesia, an AI-generated avatar of a military commander helped rebrand the country’s defense minister as a “chubby-cheeked” man who “makes Korean-style finger hearts and cradles his beloved cat, Bobby, to the delight of Gen Z voters”, Reuters reported. In India, AI versions of dead politicians have been brought back to compliment elected officials, according to Al Jazeera.

But US regulations aren’t ready for the boom in fast-paced AI technology and how it could influence voters. Soon after the fake call in New Hampshire, the Federal Communications Commission announced a ban on robocalls that use AI audio. The agency has yet to put rules in place to govern the use of AI in political ads, though states are moving quickly to fill the gap in regulation.

The US House launched a bipartisan taskforce on 20 February that will research ways AI could be regulated and issue a report with recommendations. But with partisan gridlock ruling Congress, and US regulation trailing the pace of AI’s rapid advance, it’s unclear what, if anything, could be in place in time for this year’s elections.

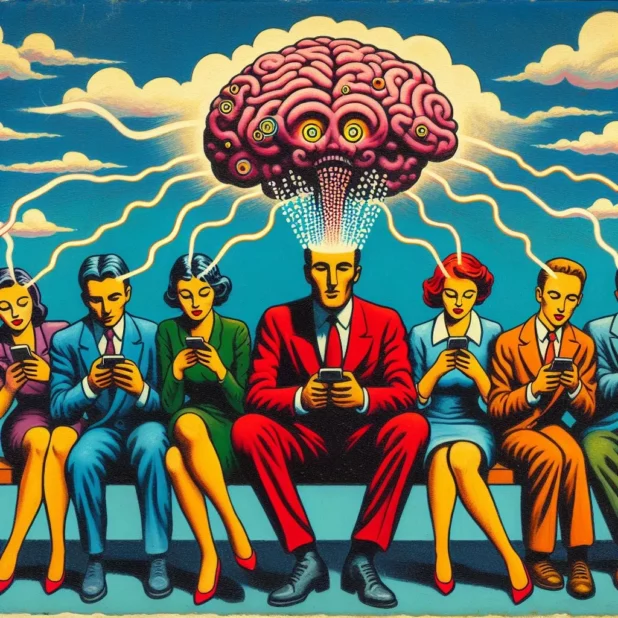

Without clear safeguards, the impact of AI on the election might come down to what voters can discern as real and not real. AI – in the form of text, bots, audio, photo or video – can be used to make it look like candidates are saying or doing things they didn’t do, either to damage their reputations or mislead voters. It can be used to beef up disinformation campaigns, making imagery that looks real enough to create confusion for voters.

…

Perhaps most concerning, though, is that the advent of AI can make people question whether anything they’re seeing is real or not, introducing a heavy dose of doubt at a time when the technologies themselves are still learning how to best mimic reality.

“There’s a difference between what AI might do and what AI is actually doing,” said Katie Harbath, who formerly worked in policy at Facebook and now writes about the intersection between technology and democracy. People will start to wonder, she said, “what if AI could do all this? Then maybe I shouldn’t be trusting everything that I’m seeing.”

If mass censorship is offensive to you, you are a fascist and you should move to Russia or China.

In democracy, which is a utopian system, we don’t allow people to just go around saying things.

Saying things leads to total destruction.

First, the federal requirements.

If you are training a large model (generally anything over 10b parameters), you must report to the government re: “any ongoing or planned activities related to training, developing, or producing [your] model.” https://t.co/82MxmBC8zV pic.twitter.com/NSuUcSOrIL

— Jeff Amico (@_jamico) February 23, 2024

If you train your model using a US-based centralized cloud, the cloud must first verify your identity through a new KYC program, collecting personal info including home address, bank info, IP addresses and the planned details of your training run, before opening your account.… pic.twitter.com/JYjHmtiM1Z

— Jeff Amico (@_jamico) February 23, 2024

If you are a California resident, and are training a large model (anything using more than 10^26 FLOPs), you must assess whether the model will possess a “hazardous capability” before you can begin training. This certification is made under penalty of perjury.… pic.twitter.com/TKfk1MHCg2

— Jeff Amico (@_jamico) February 23, 2024

If (under penalty of perjury) you’re not willing or able to make this certification before training begins, you must comply with a nine-part regulatory regime including:

1. Safeguards to ensure the model weights are not disclosed

2. The capability to promptly enact a “full… pic.twitter.com/6W9mYfR0m3

— Jeff Amico (@_jamico) February 23, 2024

After the model is released, you must implement safeguards to “prevent an individual from being able to use the model to create a derivative model that was used to cause a critical harm.” You must also periodically reevaluate your safety procedures described above to ensure… pic.twitter.com/HsxsLb5VWf

— Jeff Amico (@_jamico) February 23, 2024

So where does this all end up?

Startups will struggle to meet the burden of these new laws, most of which are impossible for small teams to comply with.

Their counterparties (including centralized clouds) will find it more difficult to serve them, given the new reporting…

— Jeff Amico (@_jamico) February 23, 2024