Most of these impending doom technologies are less about doing whatever they say they’re supposed to do, and more about controlling people’s lives.

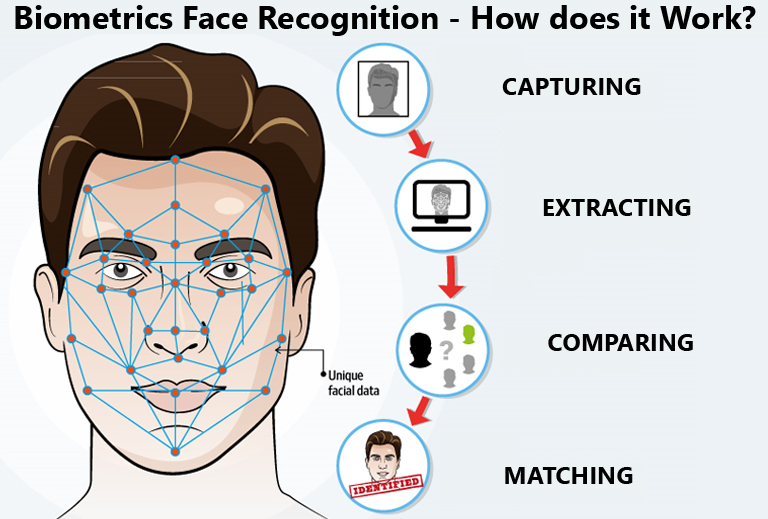

Biometric facial recognition is supposed to be about preventing fraud, but it turns out it’s really easy to commit fraud against it.

Facial-recognition systems, long touted as a quick and dependable way to identify everyone from employees to hotel guests, are in the crosshairs of fraudsters. For years, researchers have warned about the technology’s vulnerabilities, but recent schemes have confirmed their fears—and underscored the difficult but necessary task of improving the systems.

In the past year, thousands of people in the U.S. have tried to trick facial identification verification to fraudulently claim unemployment benefits from state workforce agencies, according to identity verification firm ID.me Inc. The company, which uses facial-recognition software to help verify individuals on behalf of 26 U.S. states, says that between June 2020 and January 2021 it found more than 80,000 attempts to fool the selfie step in government ID matchups among the agencies it worked with. That included people wearing special masks, using deepfakes—lifelike images generated by AI—or holding up images or videos of other people, says ID.me Chief Executive Blake Hall.

“Thousands of people have used masks and dummies like these to try and trick facial identification verification, according to ID.me,” The Wall Street Journal reports.

Facial recognition for one-to-one identification has become one of the most widely used applications of artificial intelligence, allowing people to make payments via their phones, walk through passport checking systems or verify themselves as workers. Drivers for Uber Technologies Inc., for instance, must regularly prove they are licensed account holders by taking selfies on their phones and uploading them to the company, which uses Microsoft Corp.’s facial-recognition system to authenticate them. Uber, which is rolling out the selfie-verification system globally, did so because it had grappled with drivers hacking its system to share their accounts. Uber declined to comment.

Amazon.com Inc. and smaller vendors like Idemia Group S.A.S., Thales Group and AnyVision Interactive Technologies Ltd. sell facial-recognition systems for identification. The technology works by mapping a face to create a so-called face print. Identifying single individuals is typically more accurate than spotting faces in a crowd.

Still, this form of biometric identification has its limits, researchers say.

…

Analysts at credit-scoring company Experian PLC said in a March security report that they expect to see fraudsters increasingly create “Frankenstein faces,” using AI to combine facial characteristics from different people to form a new identity to fool facial ID systems.

The analysts said the strategy is part of a fast-growing type of financial crime known as synthetic identity fraud, where fraudsters use an amalgamation of real and fake information to create a new identity.

Until recently, it has been activists protesting surveillance who have targeted facial-recognition systems. Privacy campaigners in the U.K., for instance, have painted their faces in asymmetric makeup specially designed to scramble the facial-recognition software powering cameras while walking through urban areas.

Criminals have more reasons to do the same, from spoofing people’s faces to access the digital wallets on their phones, to getting through high-security entrances at hotels, business centers or hospitals, according to Alex Polyakov, the CEO of Adversa.ai, a firm that researches secure AI. Any access control system that has replaced human security guards with facial-recognition cameras is potentially at risk, he says, adding that he has confused facial-recognition software into thinking he was someone else by wearing specially designed glasses or Band-Aids.

…

Spoofing a facial-recognition system doesn’t always require sophisticated software, according to John Spencer, chief strategy officer of biometric identity firm Veridium LLC. One of the most common ways of fooling a face-ID system, or carrying out a so-called presentation attack, is to print a photo of someone’s face and cut out the eyes, using the photo as a mask, he says. Many facial-recognition systems, such as the ones used by financial trading platforms, check to see if a video shows a live person by examining their blinking or moving eyes.

Most of the time, Mr. Spencer says, his team could use this tactic and others to test the limits of facial-recognition systems, sometimes folding the paper “face” to give it more perceived depth. “Within an hour I break almost all of [these systems],” he says.

So basically, these biometric facial ID systems that are popping up everywhere do not do the thing they are supposed to do at all. In theory, the only people who need to be face-scanned are criminals, and the entire claim of the biometrics industry is that the technology exists to prevent fraud.

Of course, this technology was already largely pointless for verifying people’s faces on the internet. It was advertised as something that would prevent hacking, when in fact any hack of the kind it was supposed to prevent simply bypasses these systems.

The only possible conclusion then is that it is not actually supposed to prevent fraud, but to gather the information of non-criminals for mass surveillance purposes, acclimate people to submitting to these systems, and further dehumanize normal people.

All of these technologies they’re coming out with are the same.

Do you think it won’t be easy to bypass the supposed security protections of implantable microchips?

These people are creating a dystopia on purpose – not because it is practical.

Daily Stormer The Most Censored Publication in History